
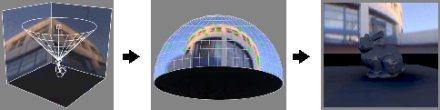

Acquired real world illumination -> approximation with light sources -> interactive rendering.

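Our interactive system for dynamic scene lighting consists of three parts: acquisition of the real-world illumination, its approximation with light sources, and interactive rendering.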

Our approach can be applied in any system that uses real-world illumination in computer graphics and visualization. For instance, it can be used in virtual studios to composite computer-generated objects into a live video stream.

We present an interactive system for fully dynamic scene lighting using captured high dynamic range (HDR) video environment maps. The key component of our system is an algorithm that efficiently decomposes an HDR video environment map captured over a hemisphere into a set of representative directional light sources, which can be used for direct lighting computation with shadows on graphics hardware. The resulting lights exhibit good temporal coherence, and their number can be changed adaptively to keep a constant frame rate while maintaining good spatial distribution (stratification) properties. We can handle a large number of light sources with shadows using a novel technique that reduces the cost of BRDF-based shading and visibility computations. We demonstrate the use of our system in a mixed reality application in which real and synthetic objects are illuminated by consistent lighting at interactive frame rates.

The environment illumination is captured using an HDR camera with a fish-eye lens, which acquires a hemispherical image of the surroundings. The camera is photometrically calibrated and has the following parameters:

The environment map is approximated with a finite number of light sources using an importance sampling algorithm. The design goals for the light source computations are as follows:

Our algorithm achieves all of these properties. It is described in our technical paper at the EGSR'05 conference (see references).
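To make the idea of approximating an environment map with light sources concrete, here is a minimal sketch of plain luminance-based importance sampling over a longitude-latitude map. It is not the algorithm from the paper: it has no temporal coherence or stratification, and the function name, the z-up convention, and the list-of-lists map layout are assumptions made for illustration only.

```python
import math
import random

def sample_light_directions(luminance, n_lights, rng=None):
    """Pick n_lights directional lights from a lat-long luminance map.

    luminance: list of rows (polar angle, zenith first), each a list of
    columns (azimuth). Hypothetical layout, chosen for this sketch.
    Returns a list of (unit_direction, irradiance) pairs.
    """
    rng = rng or random.Random(0)
    rows, cols = len(luminance), len(luminance[0])
    d_theta, d_phi = math.pi / rows, 2.0 * math.pi / cols
    weights, directions = [], []
    for r in range(rows):
        theta = (r + 0.5) * d_theta          # polar angle at the pixel centre
        for c in range(cols):
            phi = (c + 0.5) * d_phi          # azimuth at the pixel centre
            # Pixel solid angle is sin(theta) * d_theta * d_phi, so the
            # sampling weight is luminance times sin(theta).
            weights.append(luminance[r][c] * math.sin(theta))
            directions.append((math.sin(theta) * math.cos(phi),
                               math.sin(theta) * math.sin(phi),
                               math.cos(theta)))
    total = sum(weights) * d_theta * d_phi   # total energy of the map
    # Draw pixels with probability proportional to their energy.
    picks = rng.choices(range(len(directions)), weights=weights, k=n_lights)
    # Every light carries an equal share of the total energy.
    return [(directions[i], total / n_lights) for i in picks]
```

Because each light receives the same share of the total energy, bright regions of the map end up represented by more lights rather than by stronger ones.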

Interactive rendering is achieved on a standard workstation with an NVIDIA 6800GT graphics card. The system is implemented in OpenGL, and the key parts of the algorithm are coded in the pixel shader. The implementation supports rendering with an arbitrary number of light sources using shadow maps. The rendering quality of glossy surfaces is improved by various sampling enhancements (see the paper for details). Performance has been optimized through several techniques, including clustering of light sources for uneven illumination and elimination of invisible light sources. All the principles we suggest can also be used in off-line (CPU-based) renderers.
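As an illustration of direct lighting from a set of directional light sources, the following sketch sums diffuse (Lambertian) contributions at a single surface point on the CPU. It is a simplification under stated assumptions, not the shader used in the system: the `visible` callback is a hypothetical stand-in for a shadow-map lookup, and skipping lights below the local horizon is only the simplest possible form of discarding lights that cannot contribute.

```python
import math

def shade_diffuse(normal, albedo, lights, visible=lambda direction: True):
    """Sum Lambertian contributions of directional lights at one point.

    normal:  unit surface normal (x, y, z)
    albedo:  diffuse reflectance in [0, 1]
    lights:  list of (unit_direction_to_light, irradiance) pairs
    visible: hypothetical stand-in for a shadow-map test
    """
    radiance = 0.0
    for direction, irradiance in lights:
        cos_term = sum(n * d for n, d in zip(normal, direction))
        if cos_term <= 0.0:
            continue  # light is below the local horizon, no contribution
        if not visible(direction):
            continue  # light is blocked by an occluder
        # Lambertian BRDF is albedo / pi.
        radiance += (albedo / math.pi) * irradiance * cos_term
    return radiance
```

The cost of this loop grows linearly with the number of lights, which is why reducing the number of lights that must be evaluated per pixel matters for interactive frame rates.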

The following snapshots were taken from our system as real-time screen captures. The top left corner image shows the fish-eye view as taken by the camera. The top right corner image shows the longitude-latitude projection of the environment map. The green dots correspond to the directional light sources that approximate the acquired illumination. The right image shows the result of rendering with the computed directional light sources.
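For readers unfamiliar with the longitude-latitude projection, the sketch below maps a light direction to normalized image coordinates, which is how a directional light could be drawn as a dot on such a map. The axis conventions (z pointing to the zenith, azimuth measured from the x axis) and the function name are assumptions for illustration and may differ from those used in the system.

```python
import math

def direction_to_latlong(direction):
    """Map a 3D direction to (u, v) coordinates of a lat-long map.

    u runs along the azimuth and v along the polar angle,
    with v = 0 at the zenith (assumed z-up convention).
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    # Clamp guards against rounding slightly outside [-1, 1].
    theta = math.acos(max(-1.0, min(1.0, z / length)))  # polar angle
    phi = math.atan2(y, x) % (2.0 * math.pi)            # azimuth, wrapped to be non-negative
    return phi / (2.0 * math.pi), theta / math.pi
```

Multiplying `u` and `v` by the image width and height gives the pixel position of the light in the projected map.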

As a proof of concept, we conducted an experiment in a photo studio with controlled illumination conditions. The model of two angels visible on the table is interactively rendered on the screen to the left. The HDR camera is located in front of the white table. Notice that the shadows and surface illumination visible in 'reality' are faithfully reproduced on the screen by our rendering system.

Interactive illumination of a synthetic object using the illumination acquired while driving through the university campus. See the video material for the full sequence.

Illumination of synthetic objects by an indoor environment. We consider two scenarios: artificial illumination and daylight.

The following video material demonstrates the performance of our system. The video clips were captured in real time using a digital frame grabber.

Interactive System for Dynamic Scene Lighting using Captured Video Environment Maps

V. Havran, M. Smyk, G. Krawczyk, K. Myszkowski, H.-P. Seidel

In: Proc. of Eurographics Symposium on Rendering 2005.

[bibtex]

[paper]

Importance Sampling for Video Environment Maps

V. Havran, M. Smyk, G. Krawczyk, K. Myszkowski, H.-P. Seidel

Technical Sketch at SIGGRAPH 2005.

[bibtex]

[slides]

Goniometric Diagram Mapping for Hemisphere

V. Havran, K. Dmitriev, H.-P. Seidel

In: Proc. of Eurographics 2003, Short Presentations Section.

[bibtex]

[paper]

[slides]

Photometric Calibration of High Dynamic Range Cameras

G. Krawczyk, M. Goesele, H.-P. Seidel

In: MPI Research Report MPI-I-2005-4-005.

[bibtex]

[report]

[poster]

Comments and suggestions related to the project are very welcome. For further information please contact one of the authors: Vlastimil Havran, Grzegorz Krawczyk, or Karol Myszkowski.