Minimal Warping: Planning Incremental Novel-view Synthesis

Computer Graphics Forum (Proc. EGSR)

Thomas Leimkühler¹

Hans-Peter Seidel¹

Tobias Ritschel²

¹ MPI Informatik

² University College London

Abstract

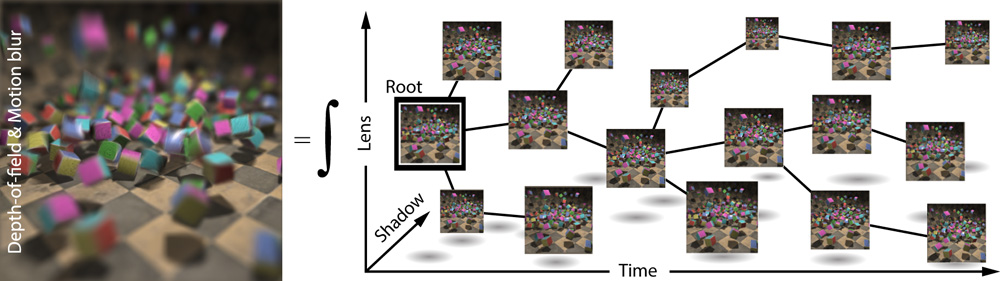

Observing that many visual effects (depth-of-field, motion blur, soft shadows, spectral effects) and several sampling modalities (time, stereo or light fields) can be expressed as a sum of many pinhole camera images, we suggest a novel efficient image synthesis framework that exploits coherency among those images. We introduce the notion of “distribution flow”, which represents the 2D image deformation in response to changes in the high-dimensional time, lens, area-light, spectral, etc. coordinates. Our approach plans the optimal traversal of the distribution space of all required pinhole images such that, starting from one representative root image that is incrementally changed (warped) in a minimal fashion, pixels move by at most one pixel, if at all. The incremental warping allows extremely simple warping code, typically requiring half a millisecond per pinhole image on an Nvidia GeForce GTX 980 Ti GPU. We show that the bounded sampling introduces very little error in comparison to re-rendering or a common warping-based solution. Our approach allows efficient previews for arbitrary combinations of distribution effects and imaging modalities with little noise and high visual fidelity.
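To make the planning idea concrete, the following is a minimal sketch and not the planner used in the paper. It assumes that each required pinhole image is a point in distribution space, that a hypothetical constant `max_flow_per_unit` bounds how many pixels the image can move per unit distance in that space, and it substitutes a plain minimum spanning tree (Prim's algorithm) for the optimal traversal. Each tree edge is split into enough sub-steps that no single warp moves a pixel by more than one pixel.

```python
import math

def plan_traversal(samples, root=0, max_flow_per_unit=1.0):
    """Plan a warp order over distribution-space samples.

    samples: list of coordinate tuples (e.g. lens or time coordinates).
    max_flow_per_unit: ASSUMED upper bound on pixel motion per unit
        distance in distribution space (hypothetical parameter).
    Returns a list of (parent, child, n_steps) tuples in visiting order,
    where n_steps sub-warps keep each warp at or below one pixel.
    """
    n = len(samples)
    in_tree = [False] * n
    best = [math.inf] * n   # cheapest known distance to the growing tree
    parent = [-1] * n
    best[root] = 0.0
    plan = []
    for _ in range(n):
        # Prim's algorithm: pick the unvisited sample closest to the tree.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        if parent[u] >= 0:
            # Split the edge so that each sub-warp moves at most one pixel.
            steps = max(1, math.ceil(best[u] * max_flow_per_unit))
            plan.append((parent[u], u, steps))
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(samples[u], samples[v])
                if d < best[v]:
                    best[v] = d
                    parent[v] = u
    return plan

# Three hypothetical 2D lens samples; the first one is the root image.
plan = plan_traversal([(0.0, 0.0), (1.0, 0.0), (0.0, 2.5)],
                      root=0, max_flow_per_unit=2.0)
```

Here `plan` lists which already-available image each new image would be warped from, and in how many bounded steps. A real planner would derive the bound from the actual distribution flow of the scene instead of a single constant.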

© 2017 The Authors. This is the authors' version of the work. It is posted here for personal use, not for redistribution.

Citation

Thomas Leimkühler, Hans-Peter Seidel, Tobias Ritschel

Minimal Warping: Planning Incremental Novel-view Synthesis

Computer Graphics Forum (Proc. EGSR) (to appear)

@article{Leimkuehler2017EGSR,
  author = {Thomas Leimk\"uhler and Hans-Peter Seidel and Tobias Ritschel},
  title = {Minimal Warping: Planning Incremental Novel-view Synthesis},
  journal = {Computer Graphics Forum (Proc. EGSR)},
  year = {2017},
  volume = {36},
  number = {4},
  publisher = {The Eurographics Association and John Wiley \& Sons Ltd.},
}

Acknowledgements

This work was partly supported by the Fraunhofer and the Max Planck cooperation program within the framework of the German pact for research and innovation (PFI).